This is Part II of a presentation on data quality given by David Tussey, formerly of the NYC Department of Information Technology and Telecommunications, and Jun Yan, professor of statistics at the University of Connecticut. Part I focused on how to undertake a data cleansing effort.

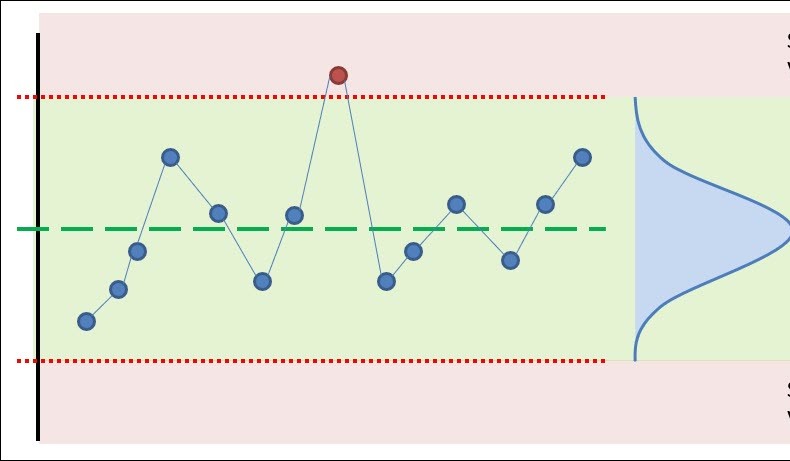

This presentation focuses on measuring data quality over time. We examine the challenges of doing so, essentially attempting to answer the question, "Is my data getting better or worse?" We discuss several data quality measures, how to capture and visualize them over time, and how to detect deviations in data quality using a technique known as statistical process control (SPC). We demonstrate the proposed framework by running data quality scripts live against the NYC 311 Service Request (SR) dataset.
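To make the idea of statistical process control concrete, here is a minimal sketch of one common SPC tool, an individuals (XmR) control chart, applied to a data quality metric tracked over time. It is an illustration under stated assumptions, not the presenters' framework: the metric (a hypothetical weekly percentage of service requests missing a field), the sample values, and the function names `individuals_control_limits` and `out_of_control` are all invented for this example. The limits are the baseline mean plus or minus three estimated standard deviations, where the standard deviation is estimated from the average moving range divided by the constant d2 = 1.128.

```python
from statistics import mean


def individuals_control_limits(baseline):
    """Return (lower, center, upper) limits for an individuals (XmR) chart.

    `baseline` is a list of metric values from a period assumed to be stable.
    """
    if len(baseline) < 2:
        raise ValueError("need at least two baseline observations")
    center = mean(baseline)
    # Moving ranges: absolute differences between consecutive observations.
    moving_ranges = [abs(b - a) for a, b in zip(baseline, baseline[1:])]
    mr_bar = mean(moving_ranges)
    # d2 = 1.128 is the bias-correction constant for moving ranges of size 2.
    sigma_hat = mr_bar / 1.128
    return center - 3 * sigma_hat, center, center + 3 * sigma_hat


def out_of_control(series, lower, upper):
    """Return the indices of points falling outside the control limits."""
    return [i for i, x in enumerate(series) if x < lower or x > upper]


# Hypothetical data: weekly % of service requests missing a required field.
baseline_weeks = [2.1, 2.3, 2.0, 2.2, 2.4, 2.1, 2.2, 2.3]
new_weeks = [2.2, 2.5, 3.4]

lower, center, upper = individuals_control_limits(baseline_weeks)
flagged = out_of_control(new_weeks, lower, upper)
print(f"limits: {lower:.2f} to {upper:.2f}; flagged weeks: {flagged}")
```

Here only the third new week (3.4%) exceeds the upper limit of roughly 2.73%, so it would be flagged as a deviation worth investigating. An XmR chart is chosen because a data quality metric computed once per period yields one observation per period, which is exactly the case the individuals chart is designed for.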

By the end, we hope to have illustrated both the issues involved in measuring changes in data quality over time and a potential solution.